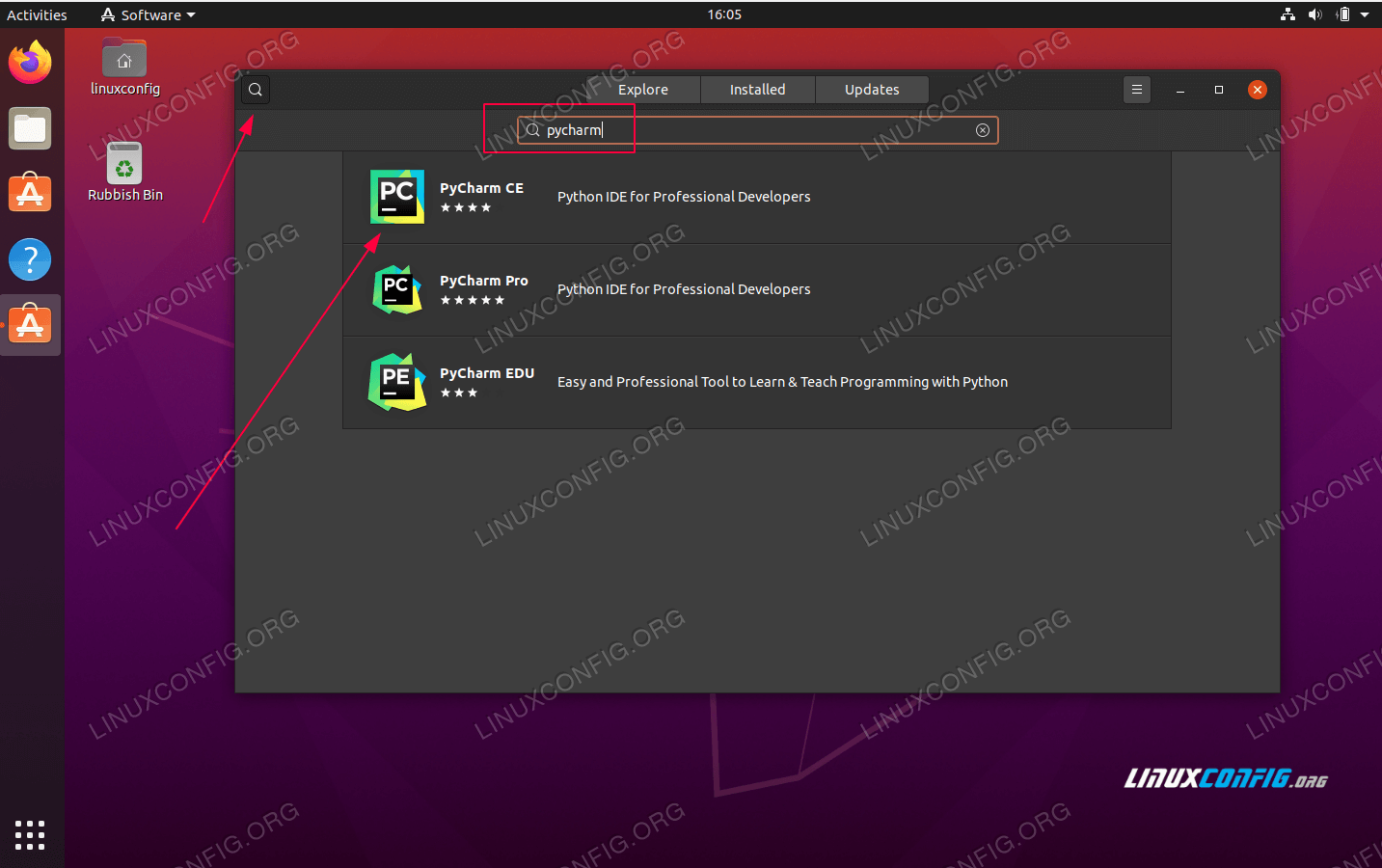

Since nowadays most packages are available for Python 3, I always highly recommend preferring Python 3 over Python 2. Unfortunately, PySpark currently does not support Python 3.6; this will be fixed soon in Spark 2.1.1 and 2.2.0 (see this issue). Therefore we have to create a new Python environment in Anaconda which uses Python 3.5.

## 1.1 Open Anaconda Navigator

Anaconda provides a graphical frontend called Anaconda Navigator for managing Python packages and Python environments. Open the application, and you will be presented with its start screen.

## 1.2 Create Python 3.5 Environment

In order to use Python 3.5 you have to create a new Python environment. Go to "Environments" and then press the plus button.
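Because it is easy to end up running a script with the wrong interpreter, a small check at the start of a script can confirm that the new Python 3.5 environment is the one actually in use. This is a minimal sketch: `python_ok_for_spark` is a hypothetical helper that encodes only the rule stated above, and treating Python 3.6 *and newer* as unsupported before Spark 2.1.1 is an assumption on my part. The Spark version 2.1.0 in the demo is just an example value.

```python
import sys


def python_ok_for_spark(spark_version, python_version=None):
    """Return True if this Python version should work with the given Spark version.

    Hypothetical helper. Assumption: Python 3.6 and newer are treated as
    unsupported for Spark releases before 2.1.1; everything else passes.
    """
    if python_version is None:
        python_version = tuple(sys.version_info[:2])
    if tuple(python_version[:2]) >= (3, 6) and tuple(spark_version) < (2, 1, 1):
        return False
    return True


if __name__ == "__main__":
    # Shows which interpreter the IDE launched, e.g. the new Python 3.5 environment
    print("Interpreter: {}".format(sys.executable))
    print("OK for Spark 2.1.0 (example): {}".format(python_ok_for_spark((2, 1, 0))))
```

Passing the versions as plain tuples keeps the comparison simple, since Python compares tuples element by element.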

# How to use PyCharm with Anaconda: install

I chose the Python distribution Anaconda because it comes with high-quality packages and lots of precompiled native libraries (which otherwise can be non-trivial to build on Windows). Specifically, I chose to install Anaconda3, which comes with Python 3.6 (instead of Anaconda2, which comes with Python 2.7 from the previous Python mainline).
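To confirm that a script really runs on the Anaconda interpreter rather than on some other Python installed on the machine, it can inspect `sys.prefix`. This sketch relies on a heuristic assumption: conda environments, including Anaconda's base installation, contain a `conda-meta` directory under their prefix. The name `is_conda_python` is hypothetical.

```python
import os
import sys


def is_conda_python(prefix=None):
    """Return True if `prefix` (default: the running interpreter's) looks like a conda env.

    Heuristic assumption: every conda environment has a 'conda-meta'
    directory holding its package metadata.
    """
    if prefix is None:
        prefix = sys.prefix
    return os.path.isdir(os.path.join(prefix, "conda-meta"))


if __name__ == "__main__":
    print("Interpreter: {}".format(sys.executable))
    print("Python version: {}.{}.{}".format(*sys.version_info[:3]))
    print("Conda environment: {}".format(is_conda_python()))
```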

# How to use PyCharm with Anaconda: Windows 10

So what's missing? A wonderful IDE to work with. This is where PyCharm from the great people at JetBrains comes into play. But PySpark is not a native Python program; it is merely an excellent wrapper around Spark, which in turn runs on the JVM. Therefore it's not completely trivial to get PySpark working in PyCharm, but it's worth the effort for serious PySpark development! So I will try to explain all the required steps to get PyCharm, as the (arguably) best Python IDE, working with Spark, as the (not-arguably) best big data processing tool in the Hadoop ecosystem. To make things a little bit more difficult, I chose to get everything installed on Windows 10; Linux is not much different, although a little bit easier.
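Because PySpark ships inside the Spark distribution rather than as an ordinary package of the Python environment, `import pyspark` fails until its files are on the interpreter's search path. The following is a minimal sketch of one way to do that from code, assuming a Spark binary download whose folder is referenced by a `SPARK_HOME` environment variable and which uses the usual `python/` and `python/lib/py4j-*-src.zip` layout. The function name `add_pyspark_to_path` is hypothetical.

```python
import glob
import os
import sys


def add_pyspark_to_path(spark_home=None):
    """Prepend a Spark distribution's Python bindings to sys.path.

    Assumes the usual layout of a Spark binary download:
      SPARK_HOME/python                      -> the pyspark package
      SPARK_HOME/python/lib/py4j-*-src.zip   -> Py4J, the bridge to the JVM
    Returns the list of paths that were added.
    """
    if spark_home is None:
        spark_home = os.environ.get("SPARK_HOME")
    if not spark_home:
        raise RuntimeError("SPARK_HOME is not set")

    python_dir = os.path.join(spark_home, "python")
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    if not os.path.isdir(python_dir) or not py4j_zips:
        raise RuntimeError("no pyspark/py4j found under {}".format(spark_home))

    # If several Py4J archives exist, take the last one in sorted order.
    paths = [python_dir, py4j_zips[-1]]
    # Insert in reverse so that python_dir ends up at sys.path[0].
    for path in reversed(paths):
        if path not in sys.path:
            sys.path.insert(0, path)
    return paths
```

Called once at the top of a script, before `import pyspark`, this only makes the import succeed; actually running Spark jobs still needs a Java runtime, since Spark runs on the JVM. The third-party `findspark` package (`findspark.init()`) automates a similar lookup.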

Spark is a wonderful tool for working with Big Data in the Hadoop world, and Python is a wonderful scripting language. Combining the two is actually what I want to write about in this article.

# How to use PyCharm with Anaconda: software

I also know of software development teams who already know Python very well and try to avoid learning Scala, in order to focus on data processing rather than on learning a new language. These teams also build complex data processing chains with PySpark. When you are working with Python, you have two different options for development. The first is to use Python's REPL (Read-Eval-Print Loop) for interactive development; you could do that on the command line, but Jupyter Notebooks offer a much better experience. The other option is a more traditional (for software development) workflow, which uses an IDE to create a complete program that is then run.

# How to use PyCharm with Anaconda: code

Currently Apache Spark, with its bindings PySpark and SparkR, is the processing tool of choice in the Hadoop environment. Initially only Scala and Java bindings were available for Spark, since it is implemented in Scala itself and runs on the JVM.

But people who are deeply into data analytics often feel more comfortable with simpler languages like Python or dedicated statistical languages like R. Fortunately, Spark comes out of the box with high-quality interfaces to both Python and R, so you can use Spark to process really huge datasets inside the programming language of your choice (that is, if Python or R is the programming language of your choice, of course). The integration of Python with Spark allows me to mix Spark code that processes huge amounts of data with other powerful Python frameworks like NumPy, Pandas and, of course, Matplotlib.
